© Patrick Pollmeier

General Knowledge for Robots

From ironing to cooking – in order to function in everyday life, robots need a lot of knowledge. The KnowRob project wants to provide this.

The complexity of our everyday environment becomes very clear when we try to view it through the eyes of a robot. What is a table? Where do I find the milk bottle in the kitchen? How do I lift it? Using this information is second nature to humans. But each individual thought process is essential for a robot if it is to help us with daily tasks. That is why researchers from the Institute for Artificial Intelligence (IAI) are developing “KnowRob,” knowledge software for robots.

Who would not want to have a robot that cooks and then loads the dishwasher and does the ironing? Some of the robots at the University of Bremen’s IAI have this potential. An apartment was set up for them with a kitchen and living area so that they could learn to perform household tasks. Already equipped with the technical gear for these – sensors, cameras, and mechanical arms – the robots now most urgently need knowledge about human environments such as a kitchen. Robots are generally only given basic information during production, for example about their own equipment. In order for them to acquire more information, a team of researchers at the University of Bremen has been working since 2013 on the KnowRob software. Michael Beetz, professor of artificial intelligence in the Faculty for Mathematics and Computer Science and head of the IAI, has led this project since the beginning. With the KnowRob software, researchers from Bremen want to give robots the ability to understand human commands. When given a request such as “iron the laundry,” robots would be able to figure out what laundry is, where to find it, and what “ironing” means. How does this software work?

Putting Knowledge into Action – How KnowRob Works

KnowRob draws on knowledge databases, among them “SOMA” (Socio-physical Model of Activities), which was developed at the University of Bremen. This knowledge database provides robot-specific information about the robots themselves, as well as their tasks and the surroundings in the robotics lab, for example, in which closet the ironing board can be found and what it is used for. KnowRob also makes use of Wikidata. Similar to Wikipedia, Wikidata contains freely accessible knowledge about various topics and is machine-readable. “That helps us tremendously, since we can use existing object descriptions and don’t need to create new definitions for everything,” says Dr. Daniel Beßler, IAI researcher and one of the main developers of KnowRob.

How do robots obtain information from Wikidata and SOMA? This is where KnowRob comes into play, since it connects the databases and the robots’ surroundings. “With KnowRob the robots can compare images they take with the information from the databases. This helps them know which objects are around them and what characteristics and functions these have,” says IAI researcher and KnowRob developer Sascha Jongebloed. KnowRob also helps robots to draw their own conclusions: Using the information that milk is perishable and that a refrigerator contains perishable food, KnowRob allows robots to conclude that milk usually belongs in the fridge.
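The milk example can be illustrated with a few lines of Python. This is only a toy sketch of rule-based inference, not KnowRob's actual interface: the fact triples, the predicate names `has_property` and `stores`, and the function `likely_storage` are all assumptions made up for illustration.

```python
# Toy knowledge base: facts as (subject, predicate, object) triples.
# All names here are hypothetical and not taken from KnowRob or SOMA.
FACTS = {
    ("milk", "has_property", "perishable"),
    ("refrigerator", "stores", "perishable"),
    ("flour", "has_property", "dry_good"),
    ("cupboard", "stores", "dry_good"),
}


def likely_storage(item, facts):
    """Return, in sorted order, the places that store a property the item has."""
    properties = {
        obj for subj, pred, obj in facts
        if subj == item and pred == "has_property"
    }
    return sorted(
        subj for subj, pred, obj in facts
        if pred == "stores" and obj in properties
    )


print(likely_storage("milk", FACTS))  # ['refrigerator']
```

Nobody told the program directly that milk goes in the fridge. The answer follows from combining two separate facts, which is the kind of conclusion described above.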

The combination of both knowledge and conclusions is still not enough to provide the robots with the ability to act. “We developed CRAM, an additional program, to do this,” Sascha Jongebloed explains. Using the knowledge from KnowRob, CRAM (short for “Cognitive Robot Abstract Machine”) derives the appropriate actions. When given the request “iron the laundry,” CRAM divides this into smaller tasks such as “go to the laundry basket,” “pick up the laundry,” “bring the laundry to the ironing board,” and “move the iron over the laundry.”
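The idea of splitting a request into smaller tasks can be sketched as follows. This is a simplified illustration and not how CRAM is implemented: the `PLANS` table and the `decompose` function are hypothetical, and the sketch assumes that no task refers back to itself.

```python
# Hypothetical plan library: each known task maps to an ordered list of subtasks.
# The subtasks are the ones named in the article; the structure is an assumption.
PLANS = {
    "iron the laundry": [
        "go to the laundry basket",
        "pick up the laundry",
        "bring the laundry to the ironing board",
        "move the iron over the laundry",
    ],
}


def decompose(task, plans):
    """Recursively expand a task into steps that have no further breakdown."""
    if task not in plans:
        return [task]  # nothing more to split: treat as a basic action
    steps = []
    for subtask in plans[task]:
        steps.extend(decompose(subtask, plans))
    return steps


for step in decompose("iron the laundry", PLANS):
    print(step)
```

Because the function is recursive, a subtask such as “pick up the laundry” could itself get an entry in the table and would then be expanded further.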

openEASE: Free Robot Knowledge for All

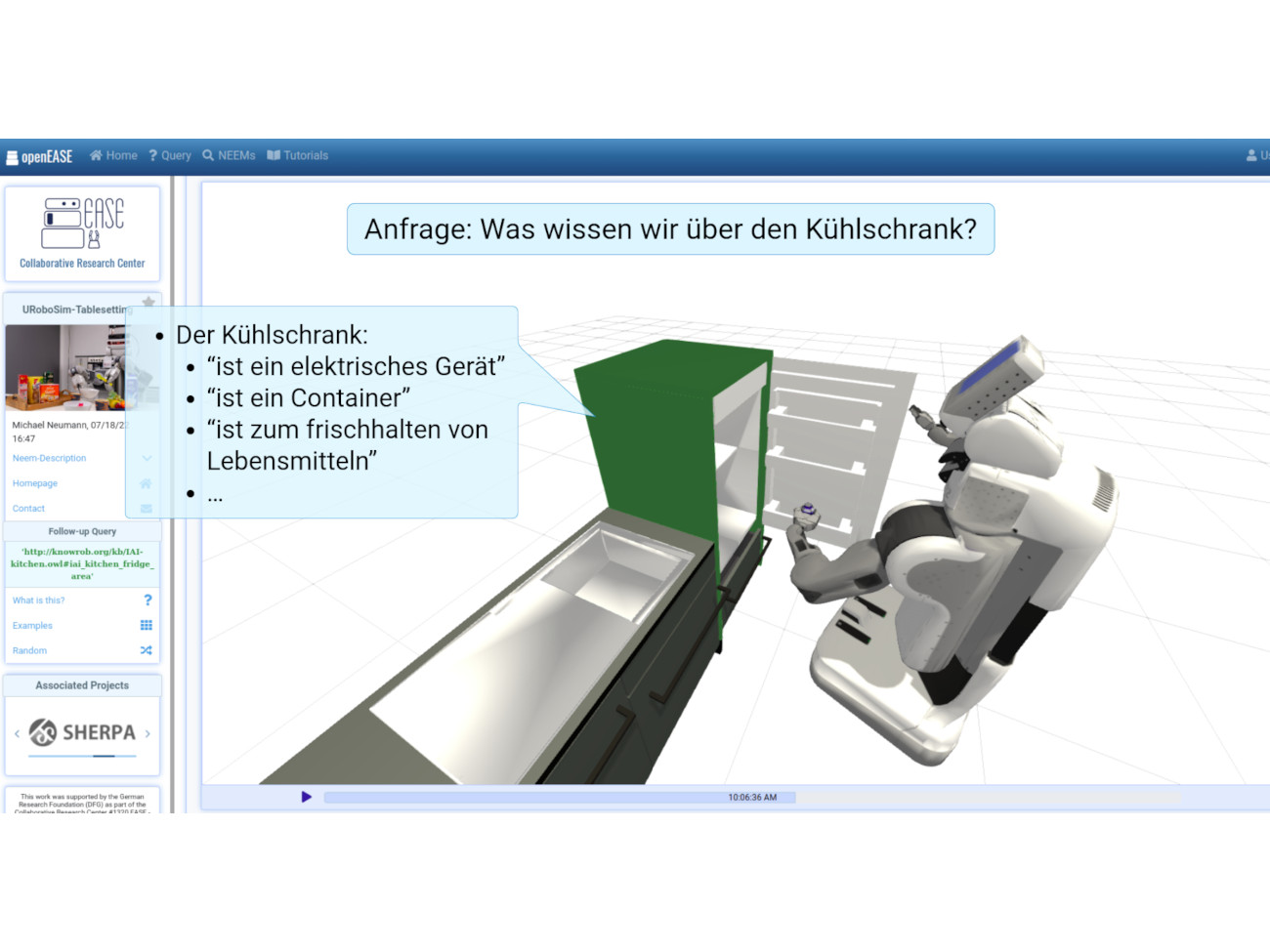

In the Bremen robotics lab, the interaction of all these software components is on display, for example, when robots make pancakes or popcorn. These experiments are not just performed by researchers here in Bremen; others from all around the world can use the digital twin lab to work with these robots. “International networking is an important objective,” Daniel Beßler emphasizes. The University of Bremen is also a member of the European Robotics Excellence Network euROBIN, which consists of 30 research institutes and other partners from 14 countries. An important contribution of Bremen’s researchers is the open knowledge platform openEASE, which was developed as part of the Collaborative Research Center EASE (“Everyday Activity Science and Engineering”). Researchers use this to upload the documentation of robotics experiments, which is then available as open source material worldwide. Anyone looking at the documentation can understand what information KnowRob gave the robots at any given point in time. They are shown a digital reproduction of the Bremen robotics lab. “By clicking on an individual object, you can see exactly what information the robots have been given about it and where potential gaps in knowledge remain,” says Sascha Jongebloed.

© Sascha Jongebloed / Universität Bremen

Focus on Explainable AI

Using this approach, researchers are hoping to achieve a more explainable AI: Robots should not just be able to perform tasks correctly; it should also be clear why they perform them in a certain way – not just to the humans who work with them, but to the robots themselves. This is why the Bremen researchers are working on a new version of KnowRob. This will provide researchers and developers with additional functions they can add to the program. One example of this is that robots will go through a simulation of situations before they act. Right now, the robots only create one path of action for each task humans give them. Humans act differently, says Sascha Jongebloed. “Even with simple tasks, for example, setting the table, we intuitively consider different ways to do this task and which one is the best in the current situation.” This ability to simulate makes robots more similar to humans – and is a further step on the path to the long-awaited household helper robots.
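What considering several ways to do a task could look like is sketched below for setting the table. This is a hedged illustration only: the candidate plans, the step costs in `STEP_COST_M` (invented walking distances in meters), and the functions `simulate` and `choose_plan` are assumptions and do not describe the planned KnowRob version.

```python
# Invented example costs: walking distance in meters for each step.
STEP_COST_M = {
    "carry plate": 4.0,
    "carry cup": 4.0,
    "carry cutlery": 4.0,
    "load tray": 1.0,
    "carry tray": 4.0,
}

# Two hypothetical ways to set the table.
CANDIDATES = {
    "one item at a time": ["carry plate", "carry cup", "carry cutlery"],
    "use a tray": ["load tray", "carry tray"],
}


def simulate(plan, step_cost):
    """Stand-in for a simulation: estimate a plan's cost by summing step costs."""
    return sum(step_cost[step] for step in plan)


def choose_plan(candidates, step_cost):
    """Run every candidate through the simulation and return the cheapest one's name."""
    return min(candidates, key=lambda name: simulate(candidates[name], step_cost))


print(choose_plan(CANDIDATES, STEP_COST_M))  # use a tray
```

A real simulation would take the current situation into account, for instance where the objects are and which routes are free, rather than a fixed cost table.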